Arduino is an open hardware board, which means manufacturers are allowed to clone it as long as they do not use the original trademark. Leveraging the license, many Chinese distributors do in fact clone the boards and sell them at very low prices, making them available almost anywhere. They do not always respect the trademark, but that's another story.

Arduino wasn't the first open hardware board, but it was the first to gain widespread traction. It's convenient, and people like convenience. Its main audience is not engineers, but even they began to use it.

A lot of people, even longtime users, think of it as a standalone product but don't know exactly how the different parts fit together. Arduino is a company, a product, a board, an IDE, a library, a community, and maybe something else as well. But for the most part, Arduino is a library for an Atmel microcontroller that was on the market long before these boards were created.

The common microcontroller (Atmel) + library scheme has some advantages. The library wraps many of the features the microcontroller has. For instance, you do not need to know which register to poke to activate a port: just choose the pin number printed on the board, and the library knows which register it maps to and what to write there. This approach has disadvantages too. For instance, digitalWrite() is very slow compared with writing the register directly, and some tests show it is even slower than one would expect.
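To make the trade-off concrete, here is a minimal host-side sketch of what such a wrapper does. It is not the Arduino implementation: `fake_portb`, `fake_portd`, `pin_info()` and `digital_write_sim()` are hypothetical names standing in for real registers and the library's lookup, and the pin layout assumes the usual ATmega328 arrangement where board pins 0 to 7 sit on port D and pins 8 to 13 on port B.

```cpp
#include <cassert>
#include <cstdint>

// Stand-ins for memory-mapped port registers (hypothetical; on real hardware
// these would be fixed addresses such as PORTB and PORTD).
static std::uint8_t fake_portb = 0;
static std::uint8_t fake_portd = 0;

// One entry per board pin: which register it lives in and which bit inside it.
struct PinInfo {
    std::uint8_t* port;
    std::uint8_t  mask;
};

// Assumed layout: pins 0-7 on port D, pins 8-13 on port B.
static PinInfo pin_info(int pin) {
    assert(pin >= 0 && pin <= 13);
    if (pin < 8) {
        return { &fake_portd, static_cast<std::uint8_t>(1u << pin) };
    }
    return { &fake_portb, static_cast<std::uint8_t>(1u << (pin - 8)) };
}

// Wrapper in the spirit of digitalWrite(): look the pin up, then set or
// clear the matching bit.
void digital_write_sim(int pin, bool high) {
    PinInfo info = pin_info(pin);
    if (high) {
        *info.port |= info.mask;
    } else {
        *info.port &= static_cast<std::uint8_t>(~info.mask);
    }
}

// Direct version: the caller already knows that pin 13 is bit 5 of port B.
void set_pin13_direct() {
    fake_portb |= static_cast<std::uint8_t>(1u << 5);
}
```

Calling `digital_write_sim(13, true)` and `set_pin13_direct()` both end up setting the same bit. The wrapper pays for the lookup on every call, which is one plausible source of the extra time on a real board.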

Another practical aspect is that this library can be used without an IDE. A lot of development boards use a modified Eclipse, which is complex and bloated. The Arduino IDE is simpler and Java-based, but it does not offer much more than a very basic editor plus a way to upload sketches. On the other hand, because it is open, some systems offer Arduino as a set of tools to be used from the command line. For instance, OpenBSD has a devel/arduino package, which installs the whole Arduino library environment, including its toolchain and a set of Makefiles that do the hard work for you. Going from nothing to a project uploaded to the board is a matter of 3 commands.

Along the same lines, some manufacturers began to use the same formula: open hardware board(s) + library (and maybe an IDE). One of these manufacturers is LeafLabs, with its Maple boards. These boards use the STM32F103, an ARM microcontroller from STMicroelectronics with a Cortex-M3 core. It's a much more powerful microcontroller than the ATmega328 used by the most common Arduino board: a faster CPU (72 MHz against 16 MHz), more flash (128 KiB instead of 32 KiB), and so on.

LeafLabs also developed a library called libmaple, which is the core of what gives these boards pretty much the same programming API as Arduino. For instance, you can use digitalWrite() to turn on a pin on a Maple board too. Although Maple does have an IDE, very similar in form to the Arduino one, it has the same limitations.

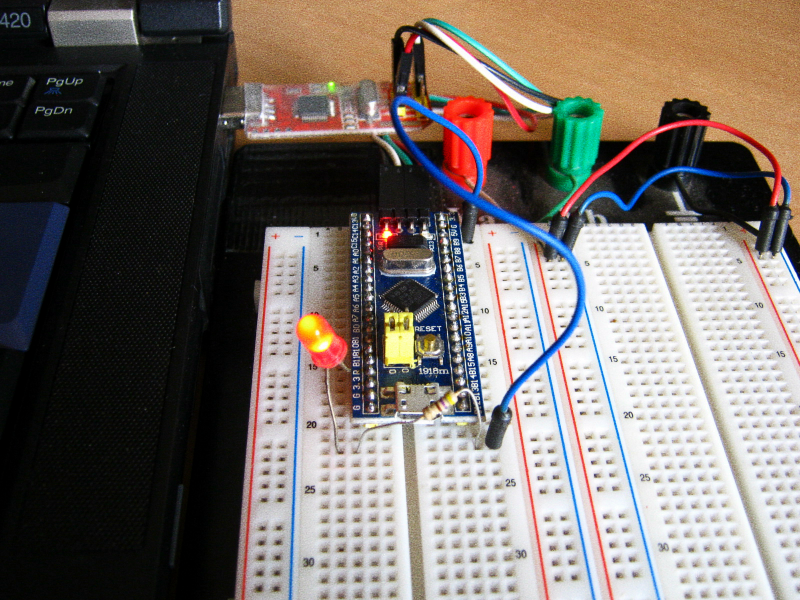

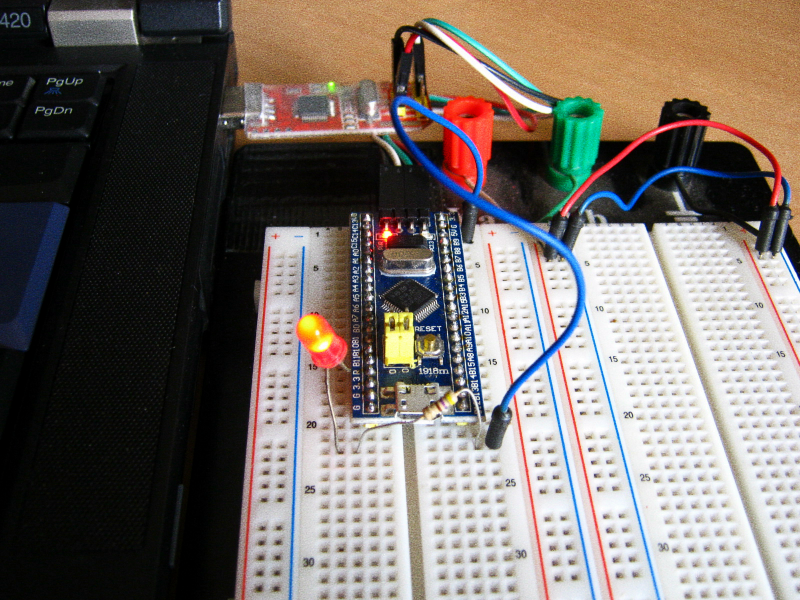

However, LeafLabs boards are no longer produced. As with Arduino, some Chinese manufacturers clone them, which makes them very affordable, but there is an even cheaper alternative: generic ARM development boards using the same microcontroller. They can be made to work just like a Maple Mini, with the same features, while costing almost half the price of a cloned one.

Burning Maple bootloader into a generic ARM board

The steps below turn a generic ARM board into a Maple Mini. An ST-Link/V2 debugger/programmer is needed, and OpenOCD must be installed. A generic debugger is also cheap.

- set pins (from debugger to the target board)

SWD is connected to IO, CLK is connected to CLK, and VCC and GND go to the obvious places.

- press reset on the target (board) and hold

$ doas openocd -f /usr/local/share/openocd/scripts/interface/stlink-v2.cfg -f /usr/local/share/openocd/scripts/target/stm32f1x_stlink.cfg

- release reset button

$ telnet localhost 4444

- press reset and hold and enter command

> reset halt

- release reset button

> dump_image gen.bin 0x08000000 0x1ffff

- download Maple Mini firmware

> flash write_image erase maple_rev5_boot20.bin 0x08000000

- unplug target board from debugger and plug it directly (in OpenBSD, you'll see it attach as ugen (LeafLabs Maple 003))

The 0x08000000 address didn't just come out of nowhere. It is taken from page 34 of the microcontroller's datasheet.

The OpenOCD dump_image command saves the original firmware in case something goes wrong. The flash write_image command writes the Maple Mini image you just downloaded to the board.
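The numbers in those two commands are easier to read once spelled out. The sketch below is only arithmetic on the values used in the steps, assuming the 128 KiB flash size quoted earlier for this microcontroller. The constant and function names are made up for illustration and are not part of OpenOCD or libmaple.

```cpp
#include <cassert>
#include <cstdint>

// Start of main flash, i.e. the address passed to both OpenOCD commands.
constexpr std::uint32_t kFlashBase = 0x08000000u;

// Assumption: 128 KiB of flash, as quoted earlier for this microcontroller.
constexpr std::uint32_t kFlashSize = 128u * 1024u;  // 0x20000 bytes

// Length argument given to dump_image in the steps.
constexpr std::uint32_t kDumpLength = 0x1ffffu;

static_assert(kFlashSize == 0x20000u, "128 KiB is 0x20000 bytes");

// Address of the last byte read when dumping `length` bytes from `base`.
std::uint32_t last_dumped_address(std::uint32_t base, std::uint32_t length) {
    assert(length > 0);
    return base + length - 1u;
}

// Number of bytes of the assumed flash that the dump does not cover.
std::uint32_t bytes_not_dumped() {
    return kFlashSize - kDumpLength;
}
```

With these values the dump ends at 0x0801FFFE, one byte short of the 128 KiB mark. If the part really has that much flash, a length of 0x20000 would cover the whole range.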

Using it

The libmaple repository is on GitHub, so getting started is a matter of cloning it, copying the example program, and then building and uploading it.

$ git clone https://github.com/leaflabs/libmaple.git

$ cd libmaple

$ cp main.cpp.example main.cpp

$ gmake BOARD=maple_mini

$ gmake BOARD=maple_mini upload

A proper arm-none-eabi toolchain is needed, and its binaries must be on $PATH. The dfu-util utility is used to upload the binary to the board.

As said earlier, LeafLabs discontinued its boards and its libraries. There is an effort to keep the old library supported, called Rambutan, and the first command above can simply be replaced by this one:

$ git clone https://github.com/rambutan32/librambutan.git

Modify main.cpp, then repeat the build and upload steps.
